Longtime Rockwell Automation partner, Stratus Technologies’ own Frank Hill, joins Bryan and Tom to talk edge computing in industrial automation

Category: Virtualization

ThinManager version 4.1 and higher supports many virtualization management features found in VMware’s vSphere Client software. Virtual sources are managed in the same easy-to-use ThinManager tree as other sources like terminal servers, thin clients, IP cameras and more.

ep22 The Plant » Training The Future Super Users of ThinManager, with Todd Garmon and Tom Jordan

Bryan Harned and Todd Garmon discuss the challenges that everyone from the system integrator down to the end user faces when using and learning ThinManager.

ep21 The Plant » Application Container Technology Shines Bright in ThinManager 12.1 release, with Nick Neppach

Bryan Harned and Nick Neppach talk ThinManager 12.1, the expansion of integrated application container technology, and why it’s a real game changer.

System Integrator Uses Thin Client Technology to Centralize Control of Disparate Machines and Systems

Growth, for any business, is always a good thing. But even good things have challenges. When a growing food and beverage customer found that too many new machines combined with their existing controls system were making work harder, they called on Everworks, Inc. to streamline their facility.

The customer had acquired multiple machines and new automation equipment as part of its growth strategy. Each new machine came with its own OEM solution, typically a stand-alone FactoryTalk View ME PanelView or SE Local Station. The SE instances ran on industrial computers that required continuous updates and expensive maintenance. What the customer needed was a strategy to integrate four OEM systems with its existing native controls to create one modern, fully automated, large food processing line.

Stratus Technologies on the Cutting “Edge”

https://www.cio.com.au/mediareleases/32706/new-zero-touch-edge-server-addresses-demand-for/

A Touchy Subject: Touchscreen Technology Bringing the World to Our Fingertips

They can bring you a world of information and services at the touch of your finger. Touchscreens on electronic devices allow almost anyone to control and operate digital gadgets with a mere tap. Let’s take a look at how touchscreens work and the ways they are changing our present and our future.

How Touchscreens Work

There are two basic types of touchscreens: resistive and capacitive. (1)

Resistive

Resistive touchscreens are the most common type of touchscreen. They’re typically found on ATMs, DVD rental kiosks and similar machines.

How it works

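The screen consists of two electrically conductive layers separated by a small gap. When you press a finger on the screen, the flexible top layer (glass or plastic) touches the layer beneath it and completes a circuit. The controller measures the resulting voltage to work out where the screen was pressed and sends that position to the software inside.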
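In a common 4-wire design, the controller applies a voltage across one layer and reads the voltage picked up by the other layer at the point of contact; that reading rises roughly linearly from one edge of the screen to the other, and the controller then swaps the roles of the layers to get the second axis. The Python sketch below shows only the final step, turning raw readings into screen coordinates. The 12-bit reading range, the calibration limits in `CALIB` and the 800 x 480 panel size are illustrative assumptions, not values from any particular controller.

```python
# Hypothetical calibration: raw 12-bit ADC readings (0-4095) seen at the panel edges.
CALIB = {"x_min": 200, "x_max": 3900, "y_min": 300, "y_max": 3800}


def adc_to_pixel(raw: int, raw_min: int, raw_max: int, pixels: int) -> int:
    """Map a raw voltage reading linearly onto a pixel coordinate."""
    # Clamp readings outside the calibrated range (e.g. presses right at the bezel).
    raw = max(raw_min, min(raw, raw_max))
    fraction = (raw - raw_min) / (raw_max - raw_min)
    # Scale to 0 .. pixels - 1 and round to the nearest pixel.
    return round(fraction * (pixels - 1))


def read_touch(raw_x: int, raw_y: int, calib: dict, width: int, height: int) -> tuple:
    """Convert one X/Y pair of layer readings into screen coordinates."""
    x = adc_to_pixel(raw_x, calib["x_min"], calib["x_max"], width)
    y = adc_to_pixel(raw_y, calib["y_min"], calib["y_max"], height)
    return x, y


print(read_touch(1125, 300, CALIB, 800, 480))  # (200, 0)
```

The clamp matters in practice because presses near the frame can produce readings slightly outside the calibrated range; without it they would map to negative or off-screen coordinates.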

Capacitive

Capacitive screens are generally found on the devices we now use every day: smartphones, electronic tablets, GPS navigation, etc.

How it works

Capacitive screens are coated with a transparent conductor that holds a small electrical charge. Because the human body also conducts electricity, a fingertip touching the screen changes the capacitance at that point. Sensors detect the change, and a microcontroller works out where the touch occurred and carries out the command.
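Projected-capacitive controllers, the kind used in most smartphones and tablets, scan a grid of sensing nodes and compare each node against a baseline measured with nothing touching the glass. The Python sketch below is a simplification that assumes the controller already delivers the grid as numbers; it flags every node whose reading has moved past a threshold. Because each node is checked independently, two fingers show up as two separate touch points, which is what makes multi-touch possible. The grid values and threshold are made up for illustration.

```python
from typing import List, Tuple

Grid = List[List[float]]


def find_touches(baseline: Grid, reading: Grid, threshold: float) -> List[Tuple[int, int]]:
    """Return (row, col) for every sensor node whose reading moved more than `threshold`."""
    touches = []
    for r, (base_row, read_row) in enumerate(zip(baseline, reading)):
        for c, (base, now) in enumerate(zip(base_row, read_row)):
            # A finger changes the capacitance near it; whether the value rises or falls
            # depends on the sensing scheme, so only the size of the change is compared.
            if abs(now - base) > threshold:
                touches.append((r, c))
    return touches


# Hypothetical 3 x 3 sensor grid with an untouched baseline of 10.0 at every node.
baseline = [[10.0] * 3 for _ in range(3)]
# Two fingers: one near the top-left node, one near the bottom-right node.
reading = [[8.0, 10.1, 10.0],
           [10.0, 10.2, 9.9],
           [10.0, 10.0, 7.5]]
print(find_touches(baseline, reading, threshold=1.0))  # [(0, 0), (2, 2)]
```

Real controllers also interpolate between neighboring nodes to locate a touch more precisely than the grid spacing allows; that step is left out here.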

Brief History of Touchscreens

How have we gotten in touch with this technology? (2)

1965 – First finger-driven touchscreens invented by E.A. Johnson

1970 – Dr. G. Samuel Hurst invents the first resistive touchscreen

1982 – First human-controlled multi-touch device developed at the University of Toronto

1983 – Hewlett-Packard releases the HP-150, one of the first touchscreen computers.

1993 – The first touchscreen phone, the Simon Personal Computer, is launched by IBM and BellSouth. Apple also releases the Newton touch-sensitive PDA.

2002 – Sony SmartSkin introduces mutual capacitive touch recognition

2008 – Microsoft introduces the Surface tabletop touchscreen. It could recognize several touchpoints at the same time.

2011 – Microsoft and Samsung introduce PixelSense technology, in which an infrared backlight reflects light back to sensors that convert it into an electronic signal.

At Your Fingertips

There is a growing number of electronic devices that use touchscreen technology, with many more uses on the horizon (percentage of Americans using each device in parentheses): (3, 4, 5, 6)

Smartphones (64%)

Tablets (42%)

eBook readers (32%)

Portable game devices (35%)

Automobiles/GPS navigators (30%)

Computer monitors (78%)

Shipments of touchscreen panels for devices (7)

2012: 1.3 billion

2013: 1.8 billion

2016*: 2.8 billion

*Projected
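As a quick back-of-the-envelope check using only the numbers listed (and remembering that the 2016 figure is a projection), the shipments imply a compound annual growth rate of roughly 21% per year over the four years from 2012 to 2016:

$$
\text{CAGR} = \left(\frac{2.8\ \text{billion}}{1.3\ \text{billion}}\right)^{1/4} - 1 \approx 2.154^{1/4} - 1 \approx 0.211 \approx 21\%
$$

The single step from 2012 to 2013 is steeper still: $1.8 / 1.3 - 1 \approx 0.38$, or about 38%.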

Touching on the Benefits … and Drawbacks

Benefits

There are distinct advantages to touchscreen technology. (8, 9)

Speed

Touchscreens are faster. With a trackball or mouse, the user has to locate the cursor, then position it to complete a task. Touchscreens react instantly to the point of contact.

Ease of use

Touchscreens are more intuitive, letting users control a device simply by pointing at what they want.

Size

Touchscreens allow devices to become smaller, since they combine data entry with the display. No need for keyboards, cords, etc.

Accessibility

Touchscreens make it easier for those with disabilities to use computer technology. For example, people with mobility issues can simply use a finger or a stylus.

Creativity

Touchscreens can allow artists to “draw” directly on the screen.

Drawbacks

Touchscreens, however, do have some drawbacks. (10)

Human touch needed

Capacitive touchscreens require direct contact from the user’s skin to register a touch. Therefore, they cannot detect touches from users wearing ordinary gloves (though special touch-sensitive gloves have been developed).

Orientation

Most touchscreens (phones, tablets, etc.) are held or laid roughly flat, whereas computer screens stand upright. Some think that makes computer screens incompatible with touchscreen technology.

Method of usage

Personal touchscreen devices are generally held close to the user, while computer screens are usually several feet away. The user has to reach out to touch them, and arm fatigue can set in.

Wear and tear

Bigger touchscreens mean shorter battery life. Also, they may be hard to keep clean.

Future of Touchscreens

While touchscreen technology has made smartphones and tablets widely popular, new devices using touchscreens are on the horizon: (11, 2)

Home appliances

Touchscreens on refrigerators and washing machines/dryers can give vital information and even deliver the day’s news!

Video games

Some video game makers are already ditching button controllers for touchscreens that allow players to tap their way to victory.

Feel

Researchers are working on touchscreens that use “microfluid technology” to create buttons that “rise up” from the surface and touchscreens in 3-D.

Source: http://www.computersciencezone.org/touchscreen-technology/

What Is ThinManager?

As with most high-tech companies, the answer is not always easy to explain without raising even more questions. “We are the global leader in thin client management and industrial mobility solutions.” Sure, it rolls off the tongue, but does that explain ThinManager to someone who does not know about factory automation, thin clients or industrial solutions?

We have developed a new video to quickly and concisely illustrate what ThinManager is and does. This is the first in a new series of videos offering a more “in-depth” look into the core functionality of ThinManager.

MailBag Friday (#43)

Every Friday, we dedicate this space to sharing solutions for some of the most frequently asked questions posed to our ThinManager Technical Support team. This weekly feature will help educate ThinManager Platform users and provide them with answers to questions they may have about licenses, installation, integration, deployment, upgrades, maintenance, and daily operation. Great technical support is an essential part of the ThinManager Platform, and we are constantly striving to make your environment as productive and efficient as possible.

Could you please advise me what configuration is required on a Windows PC to allow for a WinTMC session to span multiple monitors? We found the configuration on the thin server but still can’t get the WinTMC session to maximize across both monitors on the client system.

It is almost the same process as setting up MultiMonitor for a thin client. In the terminal configuration wizard, select Enable MultiMonitor, select the resolution of your monitors, and set up the layout of the sessions. If you choose the resolution your monitors are running at, the session should open full screen across both monitors once the client has received its configuration.

Monthly Integrator Spotlight

The Desire for Virtualization Drives ThinManager Centralized Management in Ireland

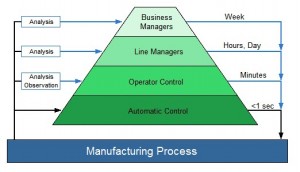

For decades, industrial automation in North America has seen consistent growth in both volume and technological development. In many parts of the world, however, manufacturers have adopted these innovations more slowly. One such innovation, virtualization, has forced companies to reexamine their stance on waiting to adopt new technologies.

Enter NeoDyne, located in Cork, Ireland. Specializing in production performance monitoring and process improvement solutions, this fifteen-year-old system integration firm has plunged headfirst into these new technologies as it continues to modernize facilities across Ireland. One of its current deployments is a Manufacturing Information System (MIS) at the main processing facility owned and operated by a global leader in the cheese and whey protein market.

Martin Farrell, the Automation Director at NeoDyne, explained to us how their customer’s desire to virtualize brought them to adopting the ThinManager Platform. “The IT managers at their main facility had been trying to support all their standalone SCADA systems for the last 20 years. Very early on in the planning process they decided they wanted a virtualized environment without physical servers sitting on the plant floor. They were looking to bring all of their applications into a VMware virtualized environment that would deliver their applications to the plant floor. At that point we advised them to adopt thin clients to replace their standalone PCs.”

It became obvious to the team at NeoDyne that updating the network infrastructure while implementing a Wonderware System Platform based architecture in a virtualized environment was going to require a centralized management solution. “We had limited experience with Terminal Services and thin clients before this deployment but knew that this was the direction we had to go. We did research, read a lot of articles and found ThinManager. It has turned out to be a fantastic product that allowed us to tie everything together. It is an intuitive software platform that makes configuration and management easy,” said Martin.

Having decided to implement all of these platforms, the team’s first step was to tackle the challenge of integrating them with the planned thin client deployment. Because the facility had three separate areas for cheese production, ingredients, and utilities, they decided to construct a network architecture using dual redundant I/O servers from Solutions PT in each area. “The facility is still very much a unit-based production outfit. Even though it is an automated plant, they still have a model of individual units and each unit requires its own dedicated control room. At the control level, it was a mix and match of everything, but we did put together a very organized structure on what had previously been a disparate group of PLC platforms from Siemens to Allen-Bradley to Mitsubishi to ABB.”

Once the architecture was firmly in place, Martin and the team at NeoDyne started applying a multitude of ThinManager Platform features to simplify everything and make the system more efficient. “We have 2 ThinManager Servers set up in a mirrored configuration so if a thin client for any reason fails to connect to one, it automatically connects to the other. If we go home at the end of the day and one of the servers fails, we know they won’t be in the dark and we have time to get it back online with minimum disruption to the operations.”
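The mirrored-server behavior Martin describes comes down to ordered failover: a client tries its first server and falls back to the next one if that attempt fails. The Python sketch below illustrates that general pattern only; it is not ThinManager’s actual implementation, and the server names and the `try_connect` callback are hypothetical stand-ins for a real network connection attempt.

```python
from typing import Callable, Sequence


def connect_with_failover(servers: Sequence[str], try_connect: Callable[[str], bool]) -> str:
    """Return the first server that accepts a connection, trying them in order."""
    for server in servers:
        if try_connect(server):
            return server  # Stop at the first server that answers.
    # Every server in the list failed: report it instead of failing silently.
    raise ConnectionError("no server reachable: " + ", ".join(servers))


# Hypothetical pair of mirrored servers; the primary is simulated as being offline.
SERVERS = ["tm-primary", "tm-secondary"]
offline = {"tm-primary"}
print(connect_with_failover(SERVERS, lambda name: name not in offline))  # tm-secondary
```

Keeping the connection attempt behind a callback makes the fallback order easy to test without a network; a real client would also retry the primary later rather than staying on the secondary forever.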

Martin then explained some of the other ThinManager Platform features they decided to take advantage of. “We deployed AppLink to deliver client sessions so the operators only have access to the InTouch Application without having to click around a Terminal Services session via the desktop to get to their SCADA application. We also use it to launch a particular application so if the session crashes it will automatically reboot the application.”

NeoDyne, like many others, also uses the ThinManager Shadowing feature to provide off-site assistance by being able to remotely log into an operator’s user session and guide them through problems in real time without having to be in the facility. However, they are also using it to reduce licensing overhead costs for their customer. “Something else we have done in the facility is to allow an operator to go between two control rooms without using additional licenses via the Terminal to Terminal Shadowing feature. We can have one session that is shadowed between two control rooms and depending on which Control Room he is in, he can take ownership of either application. These ‘part time’ thin clients that are used infrequently can identify as a shadowed thin client to avoid purchasing additional licenses to maximize cost efficiency.”

Their next goal was to find a way to allow the facility managers and supervisors to view the application without needing to travel through the facility. ThinManager WinTMC made that a simple task without having to complicate the proposed facility network architecture. “The main benefit of deploying the WinTMC feature is that it allows the plant managers and supervisors to access the application session on their desktop PCs to monitor plant performance and switch back and forth without needing additional hardware. Also, as the PCs on the floor die they can replace them with thin clients instead of PCs as part of a continuing maintenance budget. It gives them flexibility on how and when they buy more hardware.”

Now, more than a year since they began this project, we asked Ciaran Murphy, Automation Systems Lead for NeoDyne, how further deployment of ThinManager has been unfolding at the facility. “What we have found is that using ThinManager to manage the thin client setup and the actual thin clients themselves down on the plant floor is allowing us to gradually retire more and more of their SCADA clients. Their existing control systems and standalone SCADA systems are actually still there. We put in a Manufacturing Information System (MIS) over the top to analyze plant performance. Now, having seen the benefits of this technology, they will gradually replace their existing Thick clients on the Plant Floor with Thin Clients. Over the next few years they will continue to replace the rest of the standalone PCs on the floor and just be left with thin clients.”

Now that ThinManager is efficiently driving the facility systems, we wondered what is next for the team at NeoDyne. Ciaran was more than happy to tell us. “With the success of this project, we showed other clients what ThinManager could do and are already deploying it into another ongoing project we are involved in with another major dairy here in Ireland who was impressed by the product. Going forward, all of our future platform solutions for the next 5, 10, 20 years will have ACP ThinManager Platform as a fundamental part of our standard system designs and we will actively propose it. To us there isn’t even a choice; if the site allows it, we will use ThinManager.”

ABOUT NEODYNE: NeoDyne Plant Information Management solutions enable end user Lean Manufacturing, Process Performance Improvement, Overall Equipment Effectiveness, and Cost Improvement business transformation initiatives. The NeoDyne solution is specifically tailored for milk / food processing and combines features to manage and provide traceability for food batch manufacturing in continuous/batch processes. Plant automation and Quality/LIMS and ERP systems are joined into one unified solution.

__________________________________________

To review cost savings of using the ThinManager Platform, visit our ROI Calculator here.

To read about successful ThinManager Platform deployments, visit here.

To see when the next ThinManager 2-Day Training Session is being offered, visit here.

Does Virtualization Really Provide ROI?

Heading into 2013, the hot topic around the IT water cooler seems firmly focused on the “Virtualization War” being waged between Microsoft and VMware. There are arguments to be made on both sides of the debate, and who will come out on top is anyone’s guess at this point. Microsoft has a history of coming to market late with a product, only to devour the entire market with a low price point. While Microsoft looks to finish buying up the current virtualization market, VMware seems to have decided that virtualization is not the “end game” and continues to push past it toward full cloud adoption, offering its services as a rival to Microsoft Azure.

As is often the case in these full-scale technology wars, the needs and wants of the end user are ignored while an entire industry stays focused on a battle between goliaths. Regardless of who lures away whose executives, or which smaller firms are bought out to provide a deeper arsenal and better market positioning, it is our contention that whoever wins, ThinManager is still a superior technology for managing virtualized environments.

As noted in our previous article comparing ThinManager to Citrix, as well as our discussion of where the real return on a thin client system can be found, we must begin with the often ignored question of whether a platform can manage the end device. While widely regarded as an industry leader in managing virtualized environments, VMware View falls short on client management because its managed images must be accessed from clients that run their own operating system. Any platform that requires an OS on the end device also requires time and resources to keep those devices updated and operating properly.

So while VMware and ThinManager both operate on a “one to many” ratio, ThinManager requires far less resource and hardware management, which is a seemingly forgotten piece of the puzzle when determining ROI. After all, true ROI must be measured by the total cost of labor hours as well as hardware and licensing costs.

Taking this comparison one step further, the centralized ThinManager solution pays bigger dividends every time an end device is replaced or added to a facility’s architecture. In a ThinManager environment, that device only needs to be plugged in; because its configuration is maintained on the server, it is configured immediately. In a VMware environment, that device has to be configured manually before it can share data and deliver applications, requiring even more resources before it is operational. And the more clients you have, the more time has to be spent on maintenance and configuration in a VMware environment.
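The ROI argument above reduces to simple arithmetic: up-front hardware and licensing per client, plus labor hours for setup and ongoing upkeep multiplied by a labor rate. The Python sketch below writes that total out so per-client labor can be compared across architectures. The field names and example inputs are hypothetical placeholders, not measured figures for ThinManager or VMware.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical per-client cost inputs for one architecture."""
    hardware_per_client: float      # purchase price of one end device
    license_per_client: float       # per-seat software licensing
    setup_hours_per_client: float   # labor to bring one device online
    upkeep_hours_per_year: float    # per-client patching, imaging, troubleshooting


def total_cost(s: Scenario, clients: int, years: int, labor_rate: float) -> float:
    """Hardware + licensing + (setup + upkeep) labor hours priced at labor_rate."""
    purchase = clients * (s.hardware_per_client + s.license_per_client)
    labor_hours = clients * (s.setup_hours_per_client + s.upkeep_hours_per_year * years)
    return purchase + labor_hours * labor_rate


# Placeholder inputs only; substitute real quotes and measured labor hours.
example = Scenario(hardware_per_client=500.0, license_per_client=100.0,
                   setup_hours_per_client=2.0, upkeep_hours_per_year=4.0)
print(total_cost(example, clients=10, years=3, labor_rate=50.0))  # 13000.0
```

Because the labor term scales with the number of clients, every hour of per-device configuration or maintenance saved is multiplied across the whole fleet, which is why labor belongs in any ROI comparison alongside hardware and licensing.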

Yes, VMware brings some proprietary and unique features to the table, such as the PCoIP protocol. However, that is an equivalent trade-off, at best, for its lack of RDP and ICA protocol support. Add to that VMware’s lack of tiling support, client-to-client shadowing, session shadowing and MultiSession, along with its inability to connect to non-VMware virtual machines across a network, and one must begin to question the assertion that VMware is the answer to all things related to managing a virtualized environment.

Lastly, VMware’s lack of support for both applications and management of Terminal Services / Remote Desktop Services (both of which are supported by ThinManager) creates a very large problem, as most facilities in the modern industrial landscape use Windows Server in some capacity. It is one thing to compete against Microsoft in the battle for virtualization dominance, but it is another thing entirely to ignore the most widely adopted and used operating system on the planet.

While VMware and Microsoft remain locked on each other with tunnel vision, ignoring the rest of the global landscape, there are more and more viable options for creating an efficient, well-managed virtual environment, and ThinManager is at the top of that list. After all, true return on investment comes from the money and resources you don’t have to expend while ensuring the least possible downtime for your facility operations.
